How to Upload Single or Multiple Files to an S3 Bucket in Node.js 2023

In this article, we will learn how to upload single or multiple files to an S3 bucket in Node.js (Express).

1. Let's create a new Express project using express-generator.

```bash
npm i -g express-generator
express node-s3-upload --no-view
cd node-s3-upload
```

2. Install the multer and multer-s3 npm packages.

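```bash
npm i multer multer-s3
```

Multer adds a `body` object and a `file` or `files` object to the request object. The `body` object contains the values of the form's text fields, and the `file` or `files` object contains the files uploaded via the form. multer-s3 version 3.x works with an `S3Client` from the AWS SDK v3 (`@aws-sdk/client-s3`), which is what this tutorial uses.

Ref: https://www.npmjs.com/package/multer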

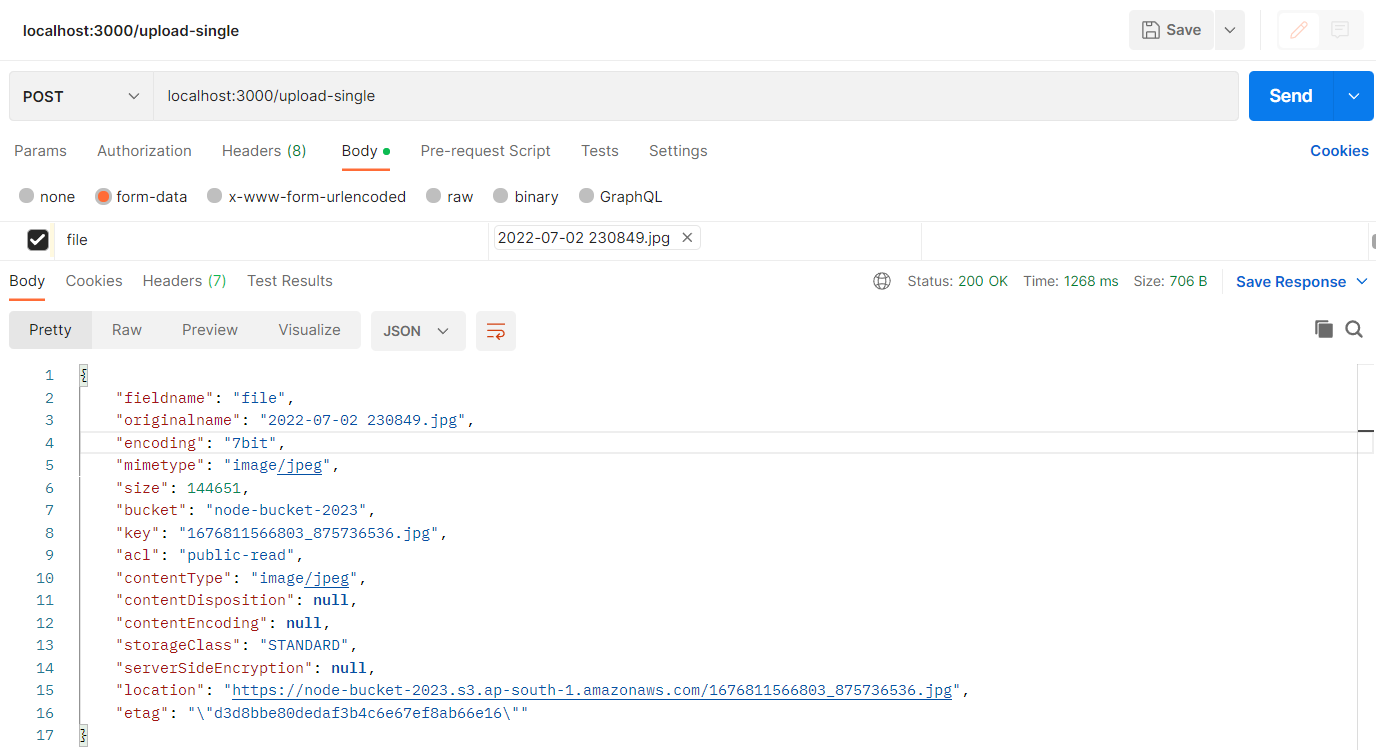

Example `req.file` object produced by multer-s3:

```json
{
    "fieldname": "file",
    "originalname": "2022-07-02 230849.jpg",
    "encoding": "7bit",
    "mimetype": "image/jpeg",
    "size": 144651,
    "bucket": "node-bucket-2023",
    "key": "1676811566803_875736536.jpg",
    "acl": "public-read",
    "contentType": "image/jpeg",
    "contentDisposition": null,
    "contentEncoding": null,
    "storageClass": "STANDARD",
    "serverSideEncryption": null,
    "location": "https://node-bucket-2023.s3.ap-south-1.amazonaws.com/1676811566803_875736536.jpg",
    "etag": "\"d3d8bbe80dedaf3b4c6e67ef8ab66e16\""
}
```

3. Install the @aws-sdk/client-s3 package.

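```bash
npm i @aws-sdk/client-s3
```

4. Install the dotenv npm package.

```bash
npm i dotenv
```

After installation, load dotenv at the top of app.js, before the routes are required (the S3 client and the upload helper read `process.env` as soon as they are loaded):

```javascript
require('dotenv').config();
```

5. Create a .env file in the project root and add these environment variables.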

```
AWS_ACCESS_KEY_ID=
AWS_SECRET_ACCESS_KEY=
AWS_REGION=
AWS_BUCKET=
```

Check out: Generate AWS Credentials

Check out: Create S3 Bucket
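Since the S3 client and the upload helper read these variables when they are loaded, an empty value usually surfaces later as a confusing SDK error. A minimal fail-fast sketch, assuming a hypothetical `utils/env.util.js` file and `assertEnv` helper that are not part of the original project:

```javascript
// utils/env.util.js (hypothetical helper, not part of the original project)
const REQUIRED_ENV = ['AWS_ACCESS_KEY_ID', 'AWS_SECRET_ACCESS_KEY', 'AWS_REGION', 'AWS_BUCKET'];

// Throws one error listing every required variable that is missing or blank.
function assertEnv(env = process.env, required = REQUIRED_ENV) {
    const missing = required.filter((name) => !env[name] || env[name].trim() === '');
    if (missing.length > 0) {
        throw new Error(`Missing environment variables: ${missing.join(', ')}`);
    }
    return true;
}

module.exports = { assertEnv, REQUIRED_ENV };
```

For example, call `require('./utils/env.util').assertEnv();` in app.js on the line after `require('dotenv').config();`, so the app refuses to start with an incomplete .env file.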

6. Create a utils folder and inside this folder create a file s3.util.js

```javascript
// utils/s3.util.js
const { S3Client } = require('@aws-sdk/client-s3');

const config = {
    region: process.env.AWS_REGION,
    credentials: {
        accessKeyId: process.env.AWS_ACCESS_KEY_ID,
        secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY
    }
}

const s3 = new S3Client(config);

module.exports = s3;
```

7. Create a helpers folder and inside this folder create a file upload.helper.js

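```javascript
// helpers/upload.helper.js
const multerS3 = require('multer-s3');
const multer = require('multer');
const path = require('path');
const s3 = require('../utils/s3.util');

const upload = multer({
    storage: multerS3({
        s3,
        // 'public-read' only works on buckets with ACLs enabled. Buckets whose Object Ownership is
        // "Bucket owner enforced" (the S3 default for new buckets since April 2023) reject ACLs,
        // so remove this option for such buckets.
        acl: 'public-read',
        bucket: process.env.AWS_BUCKET,
        // Detect the Content-Type from the file instead of defaulting to application/octet-stream
        contentType: multerS3.AUTO_CONTENT_TYPE,
        key: (req, file, cb) => {
            // Unique object key: timestamp + random number + original file extension
            const fileName = `${Date.now()}_${Math.round(Math.random() * 1E9)}`;
            cb(null, `${fileName}${path.extname(file.originalname)}`);
        }
    })
});

module.exports = upload;
```

8. Create a controllers folder and inside this folder create upload.controller.js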

controllers/upload.controller.js

const upload = require('../helpers/upload.helper');

const util = require('util');

exports.uploadSingle = (req, res) => {

// req.file contains a file object

res.json(req.file);

}

exports.uploadMultiple = (req, res) => {

// req.files contains an array of file object

res.json(req.files);

}

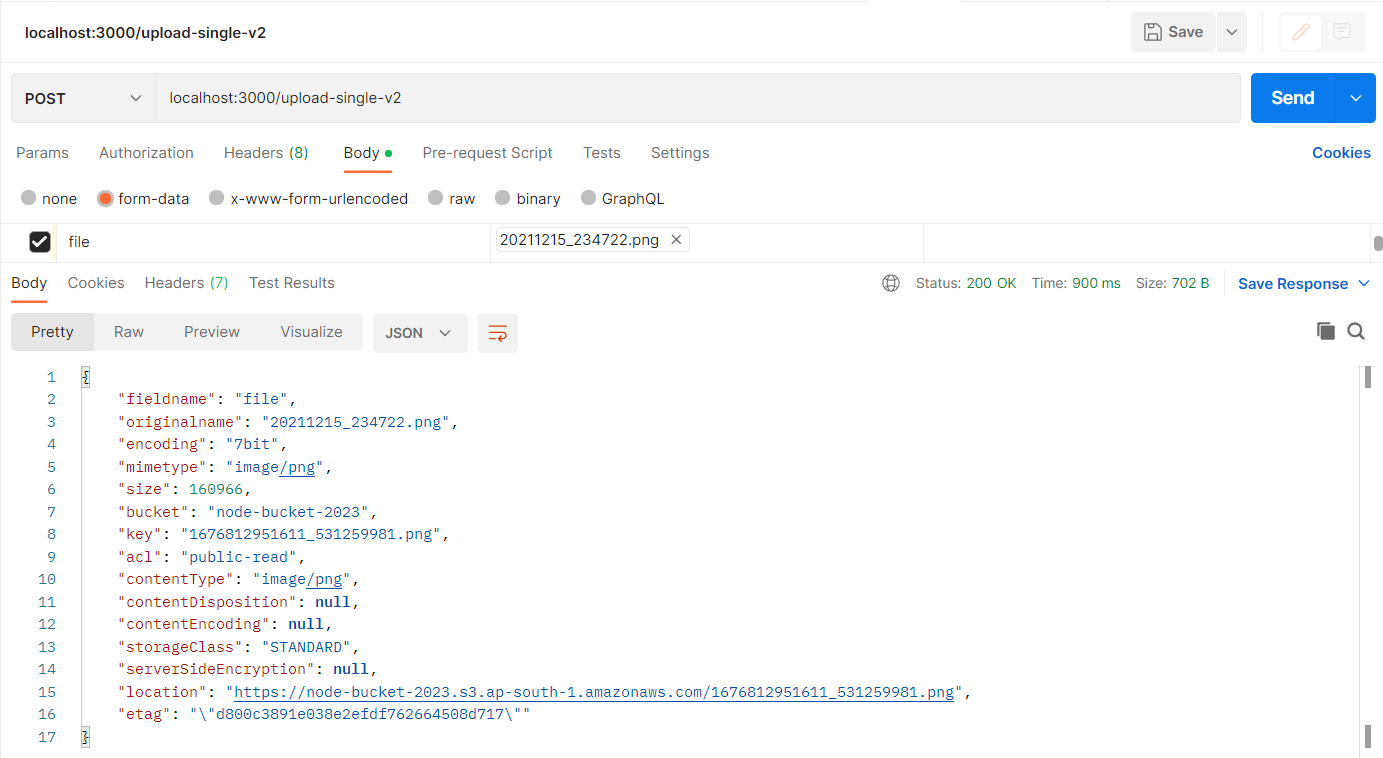

exports.uploadSingleV2 = async (req, res) => {

const uploadFile = util.promisify(upload.single('file'));

try {

await uploadFile(req, res);

res.json(req.file);

} catch (error) {

res.status(500).json({ message: error.message });

}

}6. Update routes.

routes/index.js

```javascript
const express = require('express');
const router = express.Router();

const upload = require('../helpers/upload.helper');
const uploadController = require('../controllers/upload.controller');

// 'file' and 'files' are the form field names; upload.array() accepts at most 5 files
router.post('/upload-single', upload.single('file'), uploadController.uploadSingle);
router.post('/upload-multiple', upload.array('files', 5), uploadController.uploadMultiple);

/* ------------------------ upload and error handling ----------------------- */

router.post('/upload-single-v2', uploadController.uploadSingleV2);

module.exports = router;
```
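When multer runs as route middleware (the /upload-single and /upload-multiple routes), upload failures are passed to Express's error handling instead of reaching the controller. For example, sending more than 5 files, or a field name other than `files`, to /upload-multiple produces a multer error with the code `LIMIT_UNEXPECTED_FILE`. A minimal sketch of an error-handling middleware for this; `uploadErrorHandler` and the chosen status codes are assumptions for illustration. It checks `err.name === 'MulterError'` so the sketch runs without multer installed; inside the project, `err instanceof multer.MulterError` is an equivalent check.

```javascript
// Hypothetical error-handling middleware for upload failures (not part of the original project).
// Express treats a middleware with four parameters as an error handler.
function uploadErrorHandler(err, req, res, next) {
    if (res.headersSent) {
        return next(err); // a response has already started; let Express handle it
    }
    if (err && err.name === 'MulterError') {
        // e.g. LIMIT_UNEXPECTED_FILE (too many files or unexpected field), LIMIT_FILE_SIZE
        const status = err.code === 'LIMIT_FILE_SIZE' ? 413 : 400;
        return res.status(status).json({ code: err.code, field: err.field, message: err.message });
    }
    // Any other error (for example an S3 error raised during the upload) is treated as a server error
    return res.status(500).json({ message: err.message });
}

module.exports = uploadErrorHandler;
```

Register it in app.js after the routes are mounted, e.g. `app.use(uploadErrorHandler);`. The /upload-single-v2 route catches its own errors in the controller, so this handler only affects the other two routes.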

10. Start the project.

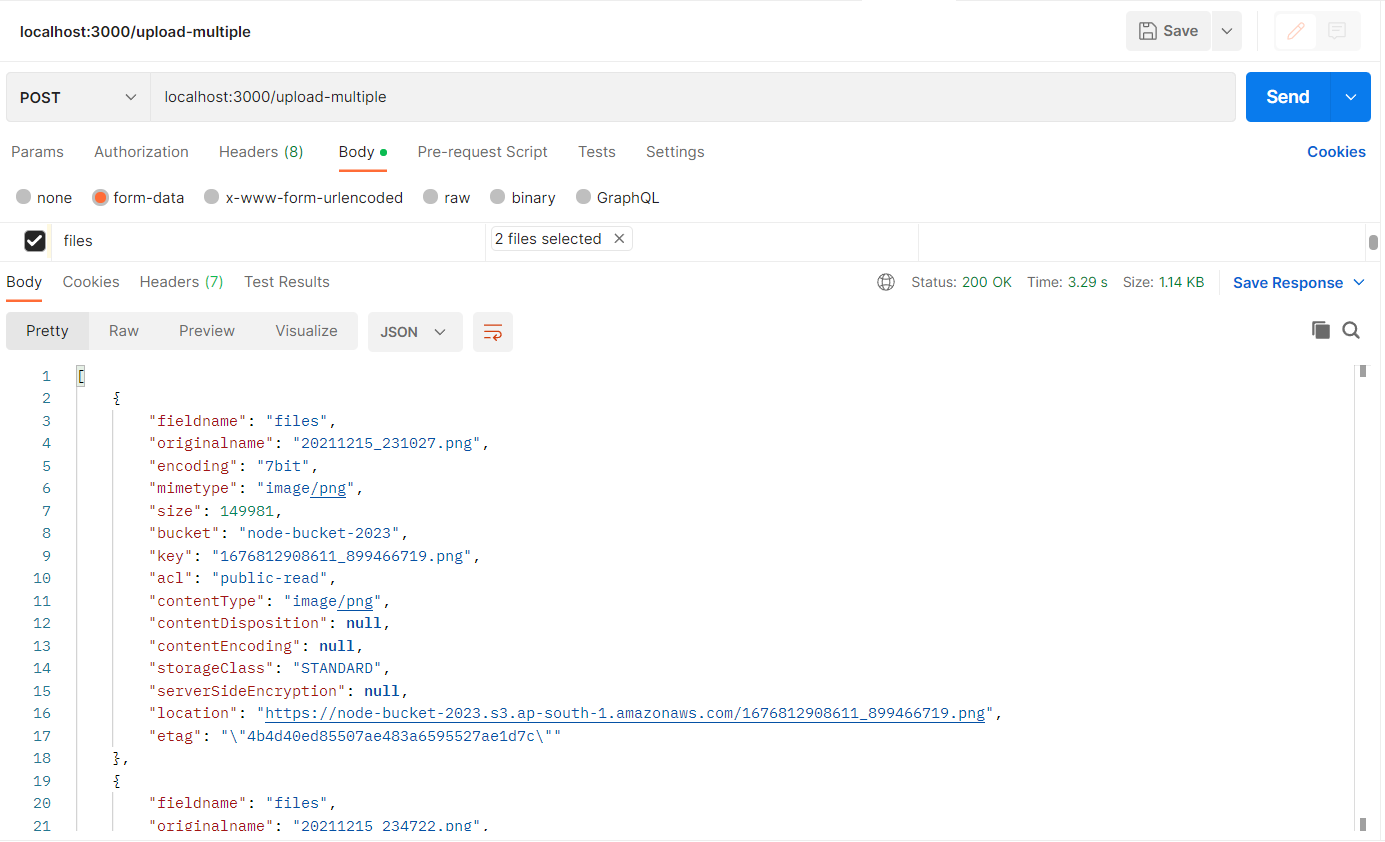

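```bash
npm start
```

Finally, open Postman or any REST API client and send a `multipart/form-data` POST request to one of the routes above (use the field name `file` for single uploads and `files` for multiple uploads).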

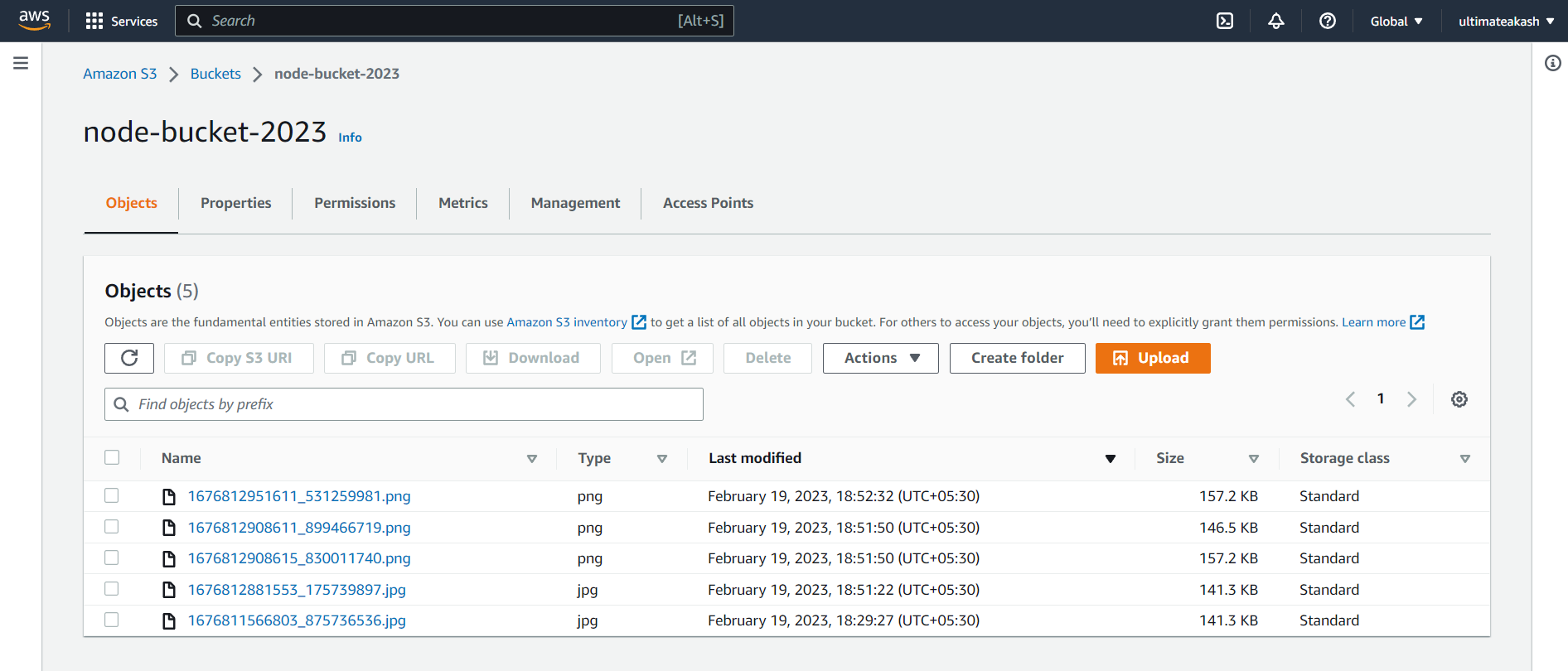
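If you prefer testing from a script instead of Postman, here is a minimal sketch for Node.js 18 or later, which provides `fetch`, `FormData` and `Blob` as globals. `buildUploadForm` and `uploadFiles` are hypothetical helpers, and `http://localhost:3000` assumes express-generator's default port:

```javascript
// Hypothetical test client (Node.js 18+, no extra packages).
// The field name must match the route: 'file' for /upload-single, 'files' for /upload-multiple.
function buildUploadForm(fieldName, files) {
    const form = new FormData();
    for (const { name, type, data } of files) {
        // The third argument sets the filename multer reports as file.originalname
        form.append(fieldName, new Blob([data], { type }), name);
    }
    return form;
}

async function uploadFiles(url, fieldName, files) {
    // Do not set Content-Type yourself: fetch adds the multipart boundary automatically
    const response = await fetch(url, { method: 'POST', body: buildUploadForm(fieldName, files) });
    if (!response.ok) {
        throw new Error(`Upload failed with status ${response.status}`);
    }
    return response.json();
}
```

For example: `uploadFiles('http://localhost:3000/upload-multiple', 'files', [{ name: 'photo.jpg', type: 'image/jpeg', data: fs.readFileSync('photo.jpg') }]).then(console.log);`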

# Delete a File

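```javascript
const { DeleteObjectCommand } = require('@aws-sdk/client-s3');
const s3 = require('../utils/s3.util');

// Top-level await is not allowed in a CommonJS module, so send the command
// from an async function (for example, an Express route handler).
const deleteFile = async () => {
    const command = new DeleteObjectCommand({
        Bucket: process.env.AWS_BUCKET,
        Key: '1676811566803_875736536.jpg'
    });
    const response = await s3.send(command);
    return response;
}
```

# Delete Multiple Files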

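```javascript
const { DeleteObjectsCommand } = require('@aws-sdk/client-s3');
const s3 = require('../utils/s3.util');

// Deletes several objects in one request; call it from an async context (e.g. a route handler)
const deleteFiles = async () => {
    const command = new DeleteObjectsCommand({
        Bucket: process.env.AWS_BUCKET,
        Delete: {
            Objects: [
                { Key: '1676812951611_531259981.png' },
                { Key: '1676812908615_830011740.png' },
            ]
        }
    });
    const response = await s3.send(command);
    // response.Deleted lists removed keys; response.Errors lists keys that could not be deleted
    return response;
}
```

Note: Key is the file name (the `key` field of the uploaded file object).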
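The uploaded file object already contains the `key`, so storing it alongside your record is the simplest way to delete the file later. If you only saved the `location` URL, or need to delete more than 1,000 objects (the per-request limit of DeleteObjects), a sketch like the following can help. `keyFromLocation` and `buildDeleteBatches` are hypothetical helpers, and `keyFromLocation` assumes virtual-hosted-style URLs such as the `location` value in the file object above:

```javascript
// Hypothetical helpers (not part of the original project).
// Assumes URLs of the form https://<bucket>.s3.<region>.amazonaws.com/<key>
function keyFromLocation(location) {
    const { pathname } = new URL(location);
    return decodeURIComponent(pathname.slice(1)); // drop the leading "/"
}

// DeleteObjects accepts at most 1,000 keys per request, so split longer lists into batches.
function buildDeleteBatches(bucket, keys, batchSize = 1000) {
    const batches = [];
    for (let i = 0; i < keys.length; i += batchSize) {
        batches.push({
            Bucket: bucket,
            Delete: { Objects: keys.slice(i, i + batchSize).map((Key) => ({ Key })) }
        });
    }
    return batches;
}

module.exports = { keyFromLocation, buildDeleteBatches };
```

Each batch is a complete input object, so it can be sent with `await s3.send(new DeleteObjectsCommand(batch))`.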

# Dynamic Bucket Name

```javascript
// helpers/upload.helper.js: export a factory that takes the bucket name
// (same requires as before: multer, multer-s3, path and the s3 client)
const upload = (bucket) => multer({
    storage: multerS3({
        s3,
        bucket,
        acl: 'public-read',
        contentType: multerS3.AUTO_CONTENT_TYPE,
        key: (req, file, cb) => {
            const fileName = `${Date.now()}_${Math.round(Math.random() * 1E9)}`;
            cb(null, `${fileName}${path.extname(file.originalname)}`);
        }
    })
});
```

Now you can pass the bucket name to the upload function.

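```javascript
// Replace 'my-bucket' with your bucket name (S3 bucket names cannot contain underscores)
router.post('/upload-single', upload('my-bucket').single('file'), uploadController.uploadSingle);
```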
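If the bucket name passed to `upload()` comes from configuration rather than a hard-coded string, checking it against the S3 naming rules catches typos at startup instead of on the first upload. A minimal sketch covering the main rules for general-purpose buckets (3 to 63 characters; lowercase letters, numbers, dots and hyphens; starts and ends with a letter or number; no adjacent dots; not formatted like an IP address). It is not an exhaustive implementation of every rule, and `isValidBucketName` and `requireBucketName` are hypothetical helpers:

```javascript
// Hypothetical helper: checks the main S3 general-purpose bucket naming rules (not all of them).
function isValidBucketName(name) {
    if (typeof name !== 'string') return false;
    // 3-63 chars of lowercase letters, digits, dots and hyphens, starting and ending with a letter or digit
    if (!/^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$/.test(name)) return false;
    if (name.includes('..')) return false; // no two adjacent periods
    if (/^\d{1,3}(\.\d{1,3}){3}$/.test(name)) return false; // must not look like an IP address
    return true;
}

// Throws at startup instead of failing on the first upload request.
function requireBucketName(name) {
    if (!isValidBucketName(name)) {
        throw new Error(`Invalid S3 bucket name: "${name}"`);
    }
    return name;
}
```

Usage: `upload(requireBucketName(process.env.AWS_BUCKET)).single('file')`.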

https://github.com/ultimateakash/node-s3-upload

If you face any issues, don't hesitate to comment below. I will be happy to help you.

Thanks.
